The New Intelligence

Modern AI and the fundamental undoing of the scientific method

Obvious |

The days of traditional, human-driven problem solving, in which we develop a hypothesis, uncover principles, and test that hypothesis through deduction, logic, and experimentation, may be coming to an end. A confluence of factors (large data sets, step-change infrastructure, algorithms, and computational resources) is moving us toward an entirely new type of discovery. This kind of discovery sits far beyond the constraints of human-like logic or decision-making: it is driven solely by AI and rooted in radical empiricism. The implications, from how we celebrate scientific discovery to how we assign moral responsibility for it, are far-reaching.

In 1962, mathematician I.J. Good predicted that a general intelligence machine would be built by 1978 that could tackle all of the most difficult problems remaining in science. In 1972, Cambridge Professor James Lighthill commented that “most workers in AI research and in related fields confess to a pronounced feeling of disappointment in what has been achieved in the past twenty-five years.” The subsequent 30 years would be filled with additional cycles of hype (springs) and disillusionment (winters) for the field of Artificial Intelligence.

For a technology that is over 50 years old, AI is having quite the comeback today. A renewed AI spring, driven by a confluence of infrastructural advancements, is now delivering real-world results. From smart personal assistants, to self-driving cars, to civilian monitoring, AI is fueling a broad transformation of many facets of our current society.

What’s most dramatic about this springtime, however, is something few have observed: AI today is advancing a fundamentally different type of intelligence, with extraordinary—and largely unknowable—implications.

In this light, AI’s return to prominence should not be thought of as a revival, but rather a reformulation.

Researchers are not only reapplying previously attempted methods for machine intelligence. They are also drastically changing the philosophical approach to defining intelligence in the first place. Exploring this shift in perspective can help place the current AI spring within a broader technological past, explain the present momentum in the space, and raise some pressing questions about the future.

Early AI: First Principles and Deductive Logic

The simple vision of a machine emulating any level of human-like logic or decision making has been given many names—including automaton, machine intelligence, and artificial intelligence. With the invention of computational hardware and associated programming languages in the 1940s, the research community first began taking steps towards realizing the promise of AI.

The first approach to AI mirrored the precedent of intelligence set by mathematicians and scientists, famed for the “Eureka!” moment where the underlying principles of a complex problem become clear.

Think of some of the scientific juggernauts in history: Copernicus, Einstein, Newton, Watson and Crick, Darwin, etc. These names represent the heroic, individual effort of the brilliant researcher: (1) thinking about a problem, (2) working to uncover the core principles, and (3) validating a hypothesis through deduction, logic, and experimentation. The early days of AI were driven by a similar type of deductive, computational logic, and once again the focus was on the programmer, who codified static rules for a computer to follow.

Problems needed to be distilled down to ground rules based on a first-principles understanding of the domain. Computers would then be tasked with following an “if-this-then-that” logical progression to deduce the end result. This period of computer science was rooted in deductive reasoning: (1) understand a priori the underlying structure of a problem, (2) encode the decision logic in executable code, and (3) hand off to the computer to follow the static instructions and compute a result. The key assumption here: the world is guided by rules, which are interpreted and encoded first by human intelligence. The primary role of AI is to follow these encoded rules so that machines can reproduce the outcomes already mapped out by humans. Artificial Intelligence is a subset of human intelligence, a replica at best.
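To make this deductive workflow concrete, here is a minimal Python sketch of a forward-chaining rule engine, the style of inference used by many early rule-based systems. The rules, the fact names, and the `forward_chain` function are hypothetical illustrations and are not taken from any historical system. A human supplies every rule, and the computer's only job is to apply those rules until nothing new follows.

```python
# A toy forward-chaining rule engine in the spirit of early, deductive AI.
# The facts and rules are hypothetical: a human writes every rule up front,
# and the computer simply applies them until nothing new can be deduced.

Rule = tuple[frozenset[str], str]  # (premises that must all hold, conclusion)


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire every rule whose premises are all known, until no rule adds anything."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # "if-this-then-that": if all premises hold, assert the conclusion
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hand-written domain knowledge (illustrative only).
RULES: list[Rule] = [
    (frozenset({"has_fur", "has_whiskers", "meows"}), "is_cat"),
    (frozenset({"is_cat"}), "is_mammal"),
    (frozenset({"is_mammal"}), "is_animal"),
]

if __name__ == "__main__":
    print(sorted(forward_chain({"has_fur", "has_whiskers", "meows"}, RULES)))
    # A silent cat matches no rule, so nothing new is deduced.
    print(sorted(forward_chain({"has_fur", "has_whiskers"}, RULES)))
```

When the engine is given `has_fur`, `has_whiskers`, and `meows`, it deduces `is_cat`, `is_mammal`, and `is_animal`. When it is given only the first two facts, it deduces nothing, because no rule anticipated a silent cat. Every conclusion the machine can reach was already implicit in the rules its programmer wrote.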

Automata mimicry.

Modern AI: Statistical Correlation and Radical Empiricism

In the past 20 years, some significant infrastructural developments have emerged that allow for a fundamentally different type of AI.

We now have access to large data sets from which to build statistical models (the internet), along with the infrastructure to store massive amounts of data (cloud storage). We also have paradigms for computing over large data (MapReduce, Spark), statistical and Bayesian algorithms (from research labs and universities), and the computational resources to run iterative algorithms (Moore’s Law, GPUs, and, increasingly, custom FPGAs and ASICs dedicated to optimizing machine learning calculations). Together, these give rise to a modern form of Artificial Intelligence, one rooted in statistical correlation.


Statistical correlation is based on implementing learning models for a computer to apply to large datasets. These statistical models are shaped by empirical performance and represent a correlation-driven representation of historical learnings. We can now assume that given the right datasets, modern AI classifiers can generate high-performing and predictive models without any dependency on the underlying principles that govern a problem.

More simply, sheer empiricism—without a need for first principles or hypotheses. The progress and performance unlocked through this modern, Bayesian approach is exhilarating.
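For contrast, the sketch below trains a multinomial naive Bayes classifier, one of the simplest Bayesian learners. It is a hypothetical toy that assumes a tiny hand-made dataset. Nobody writes a rule connecting whiskers to cats. The model's behavior comes entirely from counting which features co-occur with which labels in the examples it is shown. The functions `train` and `predict`, the feature strings, and the data are illustrative assumptions.

```python
import math
from collections import Counter, defaultdict

# A minimal multinomial naive Bayes classifier. Its behavior comes entirely
# from counting co-occurrences in labeled examples; no rule such as
# "whiskers implies cat" is ever written down.


def train(examples):
    """Count how often each label occurs and how often each feature appears with it."""
    label_counts = Counter()
    feature_counts = defaultdict(Counter)
    vocab = set()
    for features, label in examples:
        label_counts[label] += 1
        feature_counts[label].update(features)
        vocab.update(features)
    return label_counts, feature_counts, vocab


def predict(model, features):
    """Return the label with the highest posterior log-probability."""
    label_counts, feature_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior P(label)
        denom = sum(feature_counts[label].values()) + len(vocab)
        for f in features:
            # log P(feature | label), with add-one (Laplace) smoothing
            score += math.log((feature_counts[label][f] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical "observations": feature lists paired with labels.
EXAMPLES = [
    (["fur", "whiskers", "meow"], "cat"),
    (["fur", "whiskers", "purr"], "cat"),
    (["fur", "tail", "bark"], "dog"),
    (["fur", "tail", "fetch"], "dog"),
]

if __name__ == "__main__":
    model = train(EXAMPLES)
    print(predict(model, ["whiskers", "purr"]))
    print(predict(model, ["tail", "bark"]))
```

Given more (and messier) data, the same counting procedure keeps working without anyone having to articulate why a particular feature matters. That is the trade described above: predictive performance without exposed principles.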

Data-driven AI can now be applied to complex problems whose solutions humans have never been able to fully codify for an algorithm to mimic. Why not? In some cases, the human-known solutions are too nuanced and complex to translate to code. Image recognition is one such area: think about how many “rules” it would take to describe to a computer what a cat is.

In other cases, it’s because we haven’t even solved the problem to begin with. With modern machine learning and deep learning methods, a computer can build a high-performance solution to a problem without exposing the underlying principles or logic behind that solution. This creates a sharp rift between Artificial Intelligence and human intelligence: the latter is not a prerequisite of the former, and the former does not necessarily advance the latter. Artificial Intelligence is now on a path that diverges from human intelligence.

Automata obscura.

An Epistemological Undoing of Intelligence

The modern statistical approach towards AI is a fundamental undoing of the way that we think about problems and what it means to solve a problem. It is now as much about philosophical choices in the semantics of learning and knowing as it is about feature selection and classifier choice.

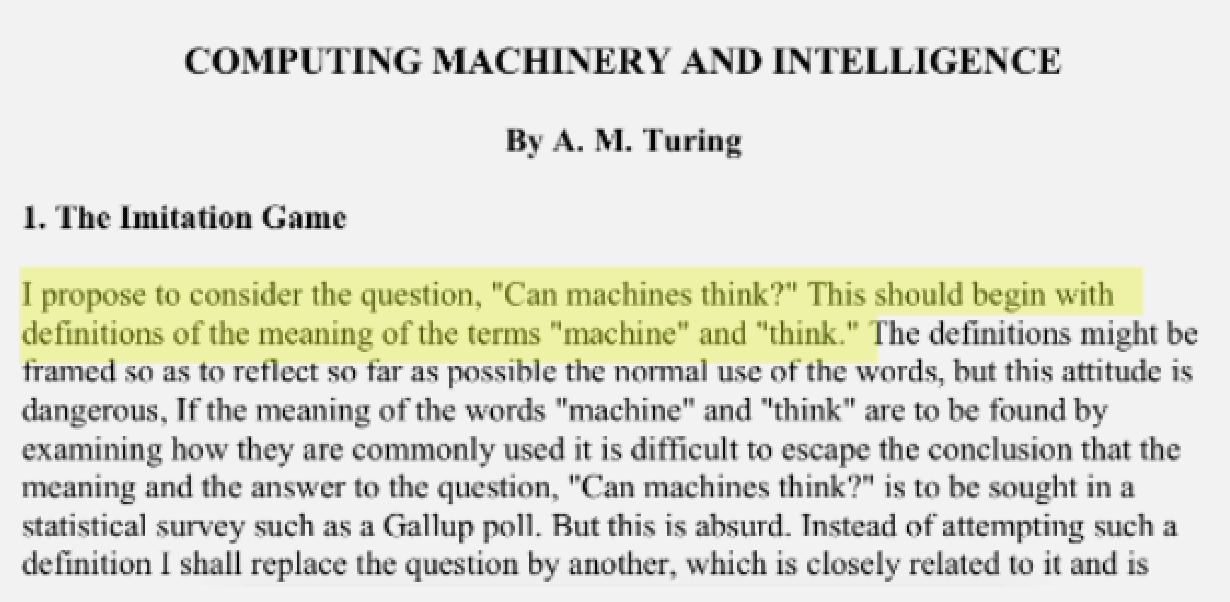

Alan Turing was already asking these epistemological (and existential) questions in 1950, no less than in the very first sentence of his groundbreaking paper “Computing Machinery and Intelligence”:

“I propose to consider the question, ‘Can machines think?’”

We must continue to consider Turing’s proposition. As modern statistical correlation approaches continue to yield useful models of complex problems, it is important to realize that this is a new and drastically different type of intelligence than the one sought after by computer scientists in the 1950s and 1960s.

This new type of intelligence does not necessarily map back to our own worldview and shed light on the problem at hand. This type of intelligence does not mimic the brilliance of the lone researcher during the moment of discovery, but is built on an obfuscated high-dimensional classification method trained on an unreadable mountain of data.

The Path Forward: Questions, not Answers

AI now has the exciting potential to advance fields where our own grasp of first principles is incomplete at best: biology, pharmaceuticals, genomics, autonomous vehicles, robotics, and more. Progress in these disciplines is already transforming our society in dramatic ways, at an accelerating pace, and it will continue to do so.

As this technology continues to develop, it will also generate challenging questions around the key topics of attribution and explainability.

Sovereignty

In April 2018, researchers from the University of Maryland applied machine learning techniques to predict the behavior of chaotic systems governed by the Kuramoto-Sivashinsky equation. The AI tools they used had no access to the equation and no information about chaos theory at all. Even so, the tools built a model that predicted the system’s behavior to a degree never demonstrated before.
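The Maryland work used reservoir computing on a far harder, spatiotemporal system. The sketch below substitutes a much simpler problem and method under stated assumptions. It forecasts the chaotic logistic map x_{n+1} = r·x_n·(1 − x_n), with r = 3.9, using nearest-neighbor, or “analogue,” prediction: find the most similar state in the recorded history and return whatever came next. The equation is used only to generate hypothetical “observed” data, and `AnalogPredictor` never sees it. All names in the sketch are illustrative.

```python
import bisect


def logistic_map(x: float, r: float = 3.9) -> float:
    """Ground-truth dynamics, used ONLY to generate data; the predictor never calls it."""
    return r * x * (1.0 - x)


def simulate(x0: float, steps: int) -> list[float]:
    """Generate a trajectory of `steps` states starting from x0."""
    xs = [x0]
    for _ in range(steps - 1):
        xs.append(logistic_map(xs[-1]))
    return xs


class AnalogPredictor:
    """Predict the next state by finding the most similar past state
    (nearest neighbor) and returning whatever followed it."""

    def __init__(self, series: list[float]) -> None:
        # Store (state, successor) pairs sorted by state for fast lookup.
        pairs = sorted(zip(series[:-1], series[1:]))
        self.states = [s for s, _ in pairs]
        self.successors = [n for _, n in pairs]

    def predict(self, x: float) -> float:
        i = bisect.bisect_left(self.states, x)
        # The nearest recorded state is on one side of the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(self.states)]
        best = min(candidates, key=lambda j: abs(self.states[j] - x))
        return self.successors[best]


if __name__ == "__main__":
    history = simulate(0.2, 5000)   # "observed" training data
    fresh = simulate(0.3141, 200)   # a new trajectory to evaluate on
    model = AnalogPredictor(history)
    pairs = list(zip(fresh[:-1], fresh[1:]))
    analog = sum(abs(model.predict(a) - b) for a, b in pairs) / len(pairs)
    persistence = sum(abs(a - b) for a, b in pairs) / len(pairs)  # "nothing changes"
    print(f"analogue one-step error:    {analog:.5f}")
    print(f"persistence one-step error: {persistence:.5f}")
```

On a fresh trajectory, the analogue forecaster’s one-step error should be far smaller than that of a naive “nothing changes” baseline, even though nothing in the predictor encodes the underlying equation. Because the system is chaotic, small one-step errors compound quickly, so iterating this simple forecaster many steps ahead would soon diverge from the true trajectory.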

Modern AI often follows the Maryland team’s approach: a workflow built on top of curated data sets and increasingly commoditized classification methods.

Without the ownership of the lone scientist, how might we attribute and celebrate discovery? How might we attribute responsibility?

Explainability, Governance, and Move 37

The victory of the program AlphaGo over world champion Lee Sedol in 2016 was a watershed moment for AI. Championship-level Go had long been thought unattainable through computational intelligence because of the combinatorial complexity of the game.

Early in the second game of the match, AlphaGo made a move so unorthodox that Lee Sedol did not respond for 15 minutes: Move 37. Commentators during the match called the move a mistake, but some in the Go community who have since studied it call it “beautiful”. Because AI is built on top of statistical correlation and empiricism, its results can be difficult to “explain” (read: to reverse-engineer into first principles and ground truth). These systems are open to interpretation because the native language of their intelligence is not one that humans necessarily understand. The debate about the actual strategy, reasoning, and logic of Move 37 continues today.

If we change the case study slightly, from evaluating an AI-generated Go move to evaluating an AI-generated New Chemical Entity submitted to the FDA, it’s clear that many dilemmas lie ahead as we adjust to this new intelligence.

We are still at the dawn of a new era in Artificial Intelligence, and in Intelligence itself. The pace of publications and breakthroughs in this technology is rivaled only by the pace at which it raises moral and epistemological questions. Explainability and Governance will play a critical role in the acceptance of modern AI solutions, especially in regulated industries. How might we interpret the solutions these systems offer and map them back onto our ontologies of the world?