Generative AI That Saves Lives

Bringing Generative AI into the healthcare stack can open up extraordinary possibilities

Kahini Shah

Let’s start with the basics. To oversimplify, machine learning (ML) tasks can be sorted into three categories of capability:

1. Worse: Not as good as a human

2. Good: As good as a human

3. Great: Better than a human

Recently, a new class of ML for language, Large Language Models (LLMs), has pushed the state of the art on many language tasks from #1 to #2. While this breakthrough has exciting implications across industries, few are more compelling than healthcare.

Stepping back, ML for language has a complicated history, with much hype and little to back it up. Many of the early implementations don’t even exist today (remember Facebook Assistant M?). Even the ones that survived had limited and unreliable functionality. In 2015, the most sophisticated thing my team at Booz Allen Hamilton was able to teach Alexa was how to check in conference attendees using a registration code. Progress in language processing accelerated dramatically after Google Brain introduced the transformer architecture in 2017, which quickly transformed the field.

Since then, there has been a steady stream of breakthroughs in the performance and applications of ML for natural language, driven by better training methods and models with billions more parameters. But the compute and energy required aren’t cheap: training a single large model can cost several million dollars, which has sidelined all but the best-funded teams. Despite these resource constraints, new technology that helps teams do more with less is emerging, such as Stable Diffusion.

Many of these LLMs can perform a variety of functions (translation, question answering, and text generation) with relatively little task-specific training, sometimes even outperforming models built for those specific tasks. They can also be extended in surprising ways (look no further than DALL-E 2). Large transformer models are also advancing the state of the art in other fields: AlphaFold, for example, predicts 3D protein structures, which is important for predicting molecular interactions and identifying drug delivery pathways.

How can we harness this new technology and move from #1 to #2, from not as good as a human to as good as a human, where it matters most? While the possibilities are endless, I believe one untapped opportunity is in healthcare, specifically in language. Here’s how:

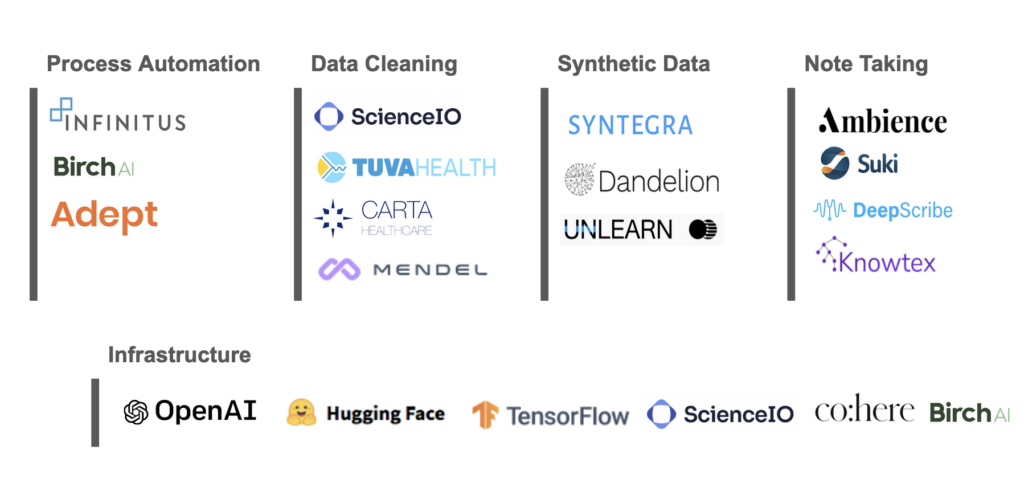

Note-Taking: Physician burnout is a national problem: almost 20% of doctors plan to stop practicing within the next two years. Many of the reasons cited for leaving relate not to the content of the work but to how physicians’ jobs are done; many of those surveyed say they dislike the amount of paperwork. Physicians reportedly spend about 35% of their time documenting patient data, leaving less time for patient care, especially during consultations. LLMs can help speed up note-taking with features like better autocomplete. They could also enable passive listening systems that capture the conversation in an exam room and translate it or generate a note from it automatically, much as a human might.

Process Automation: Healthcare has been slow to digitize its processes. Lab results are still shared by fax, prior approval for medical procedures is obtained over the phone, and patients sometimes have to carry CDs of medical images to a different doctor. These outdated methods slow patient care and drive up costs. A McKinsey report estimated that a quarter of healthcare costs, about $1 trillion, come from administrative burden alone. Domain-specific models can help automate rote processes, workflows, and phone calls. They could also be used in clinical settings, for example to help doctors quickly triage, explain, and create follow-up actions.

Data Cleaning: Nearly 90% of the data an organization generates is unstructured or semi-structured (text, video, audio, images, web server logs, etc.). In healthcare, unstructured data comes from many different sources, such as electronic health records, physician notes, lab results, and images. Formats and naming conventions vary from system to system. To make this information usable for treatment, population-level insights, or physician decision support, it first needs to be structured.
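To make “structuring” concrete, here is a minimal sketch, assuming lab results arrive as free-text lines such as `HbA1c: 6.1 %` whose test names differ from system to system. The alias table, the line pattern, and the `LabResult` schema are all hypothetical, and the parser is deliberately rule-based rather than an LLM: it only illustrates the kind of output (a canonical test name, a numeric value, a unit) that a cleaning step would need to produce.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical alias table: different source systems name the same test differently.
TEST_ALIASES = {
    "hba1c": "hemoglobin_a1c",
    "hemoglobin a1c": "hemoglobin_a1c",
    "a1c": "hemoglobin_a1c",
    "glu": "glucose",
    "glucose, fasting": "glucose",
    "fasting glucose": "glucose",
}

# Hypothetical line format: "<name> : or = <number> [unit]", e.g. "GLU = 98 mg/dL".
LINE_PATTERN = re.compile(
    r"^\s*(?P<name>[A-Za-z0-9 ,]+?)\s*[:=]\s*"
    r"(?P<value>\d+(?:\.\d+)?)\s*(?P<unit>\S+)?\s*$"
)


@dataclass
class LabResult:
    test: str            # canonical test name
    value: float         # numeric result
    unit: Optional[str]  # unit as written in the source, if any


def parse_lab_line(line: str) -> Optional[LabResult]:
    """Turn one free-text lab line into a structured record, or None if unrecognized."""
    match = LINE_PATTERN.match(line)
    if match is None:
        return None  # line does not fit the expected shape
    name = match.group("name").strip().lower()
    canonical = TEST_ALIASES.get(name)
    if canonical is None:
        return None  # unknown test name: leave it for human review instead of guessing
    return LabResult(canonical, float(match.group("value")), match.group("unit"))
```

Lines that don’t fit the pattern, or whose test names are missing from the alias table, come back as `None` rather than a guess. Keeping such an alias table current across many source systems is exactly the kind of rote work a language model might take over.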

Synthetic Data: One limitation of AI models is that they are only as good as their training data. In healthcare, Protected Health Information rules limit the sharing of data. One way around this is to use synthetic data that has the same statistical properties as real data but isn’t actual patient data. This data can be used to train and test AI models. Synthetic data has also proven useful in clinical trials, where it can simulate control populations. These “digital twins” save the time and money that would otherwise go into recruiting real subjects.
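As an illustration of “same statistical properties,” here is a minimal sketch that fits the mean vector and covariance matrix of a numeric table and then samples new rows from a multivariate normal distribution with those parameters, using a Cholesky factor of the covariance. The Gaussian model is a simplifying assumption, not a method from any particular product: it preserves only means, variances, and pairwise covariances, and on its own it is not a privacy guarantee.

```python
import math
import random

# Minimal sketch (assumption): a multivariate-Gaussian generator that preserves the
# mean vector and covariance matrix of real numeric data. Not a production
# synthetic-data method and not, by itself, a privacy guarantee.


def mean_and_covariance(rows):
    """Column means and sample covariance matrix of a list of equal-length rows."""
    n, d = len(rows), len(rows[0])
    mu = [sum(r[j] for r in rows) / n for j in range(d)]
    cov = [
        [sum((r[i] - mu[i]) * (r[j] - mu[j]) for r in rows) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    return mu, cov


def cholesky(a):
    """Lower-triangular L with L times L-transpose equal to a (a must be positive definite)."""
    d = len(a)
    low = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)  # needs no perfectly collinear columns
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def synthesize(rows, n_samples, seed=0):
    """Draw n_samples new rows from a normal distribution fitted to the real rows."""
    rng = random.Random(seed)
    mu, cov = mean_and_covariance(rows)
    low = cholesky(cov)
    d = len(mu)
    synthetic = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]  # independent standard normals
        # x = mu + L z has mean mu and covariance L L^T = cov
        synthetic.append([mu[i] + sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(d)])
    return synthetic
```

Richer generators can capture nonlinear relationships and categorical fields, but the goal is the same: match the real data’s distribution without reproducing any individual record.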

Every leap forward in technology comes with its own challenges and unknowns. Explaining how neural networks work has always been tough, and the inner workings of these LLMs seem even harder to explain than those of older model types. Stanford recently announced the Holistic Evaluation of Language Models (HELM) project to benchmark and bring transparency to LLMs, a step in the right direction. For now, experiencing the magic might be easier, and maybe more enjoyable, than figuring out the trick.

If you’re an entrepreneur working in this space or just want to connect, please reach out: kahini@obviousventures.com